An in-house calibration app that can be customized for arbitrary scenes. It is mainly used while setting up the tracking system, but its infrastructure also supports calibrating broadcast camera highlights.

The poses of the fighters are given as 18 3D keypoints (eyes, ears, nose, neck, shoulders, elbows, wrists, hips, knees, and ankles) at 90 FPS, following the COCO-18 keypoint format for human pose skeletons. The fighters are detected even when they are surrounded by other people. (The screenshot is interactive: drag and play!)

The fighting stance, averaged from the movements of the fighter during the last 30 seconds. The strike detection stage separates the moments when the fighter is holding his fighting stance from the moments when he is attempting something, and only the former are averaged to estimate the current fighting stance.
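The averaging described above can be sketched as follows. This is a minimal illustration, not the system's actual implementation: the `StanceEstimator` class, its method names, and the per-frame `is_striking` flag (standing in for the output of the strike detection stage) are all assumptions. Only the 90 FPS rate and the 30-second window come from the text.

```python
from collections import deque

FPS = 90             # frame rate stated in the text
WINDOW_SECONDS = 30  # averaging window stated in the text


class StanceEstimator:
    """Hypothetical helper: averages keypoints over recent non-strike frames."""

    def __init__(self, fps=FPS, window_seconds=WINDOW_SECONDS):
        # The deque drops the oldest frame once the window is full.
        self.frames = deque(maxlen=fps * window_seconds)

    def add_frame(self, keypoints, is_striking):
        # keypoints: list of (x, y, z) tuples, one per joint.
        # is_striking: assumed flag from the strike detection stage.
        self.frames.append((keypoints, is_striking))

    def current_stance(self):
        # Keep only the frames where the fighter is holding the stance.
        idle = [kp for kp, striking in self.frames if not striking]
        if not idle:
            return None
        n = len(idle)
        num_joints = len(idle[0])
        # Per-joint, per-axis mean over the retained frames.
        return [
            tuple(sum(frame[j][axis] for frame in idle) / n for axis in range(3))
            for j in range(num_joints)
        ]
```

Frames flagged as strikes still occupy a slot in the window, so the estimate always reflects the last 30 seconds of wall-clock time rather than the last 30 seconds of idle time.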

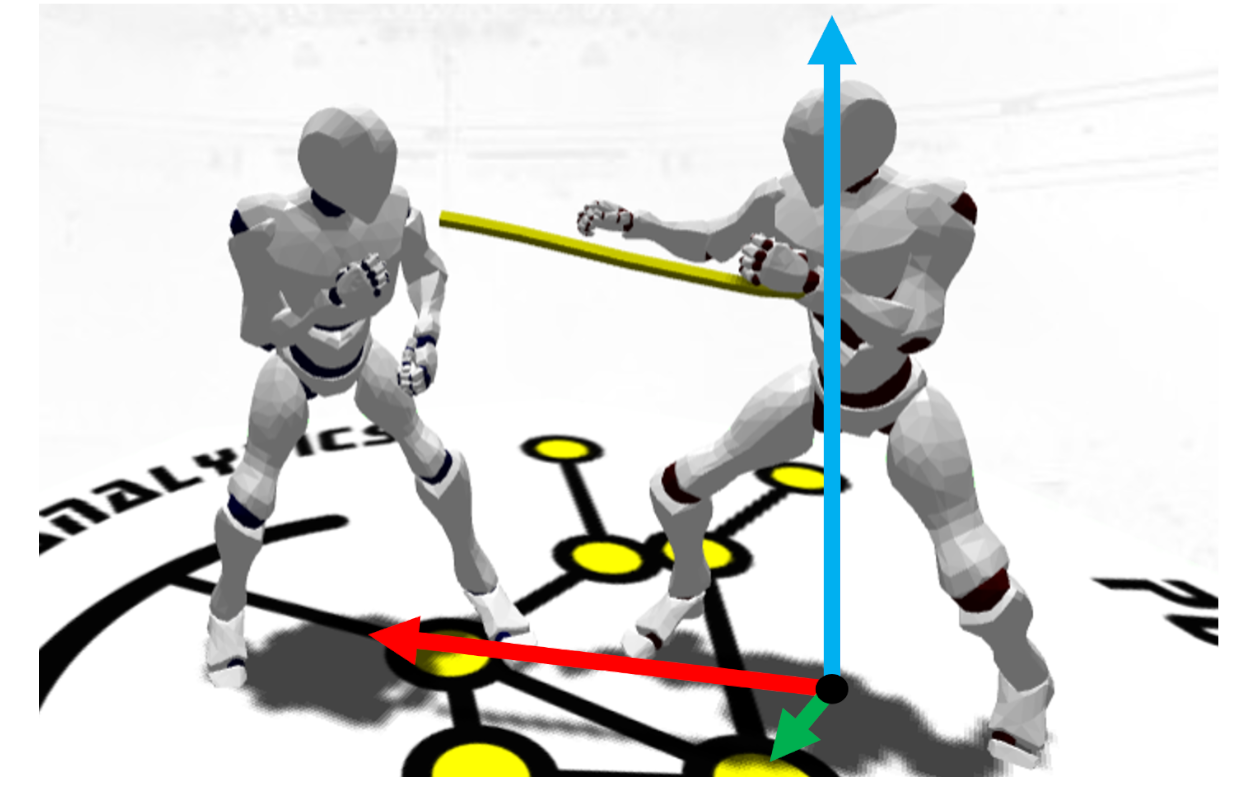

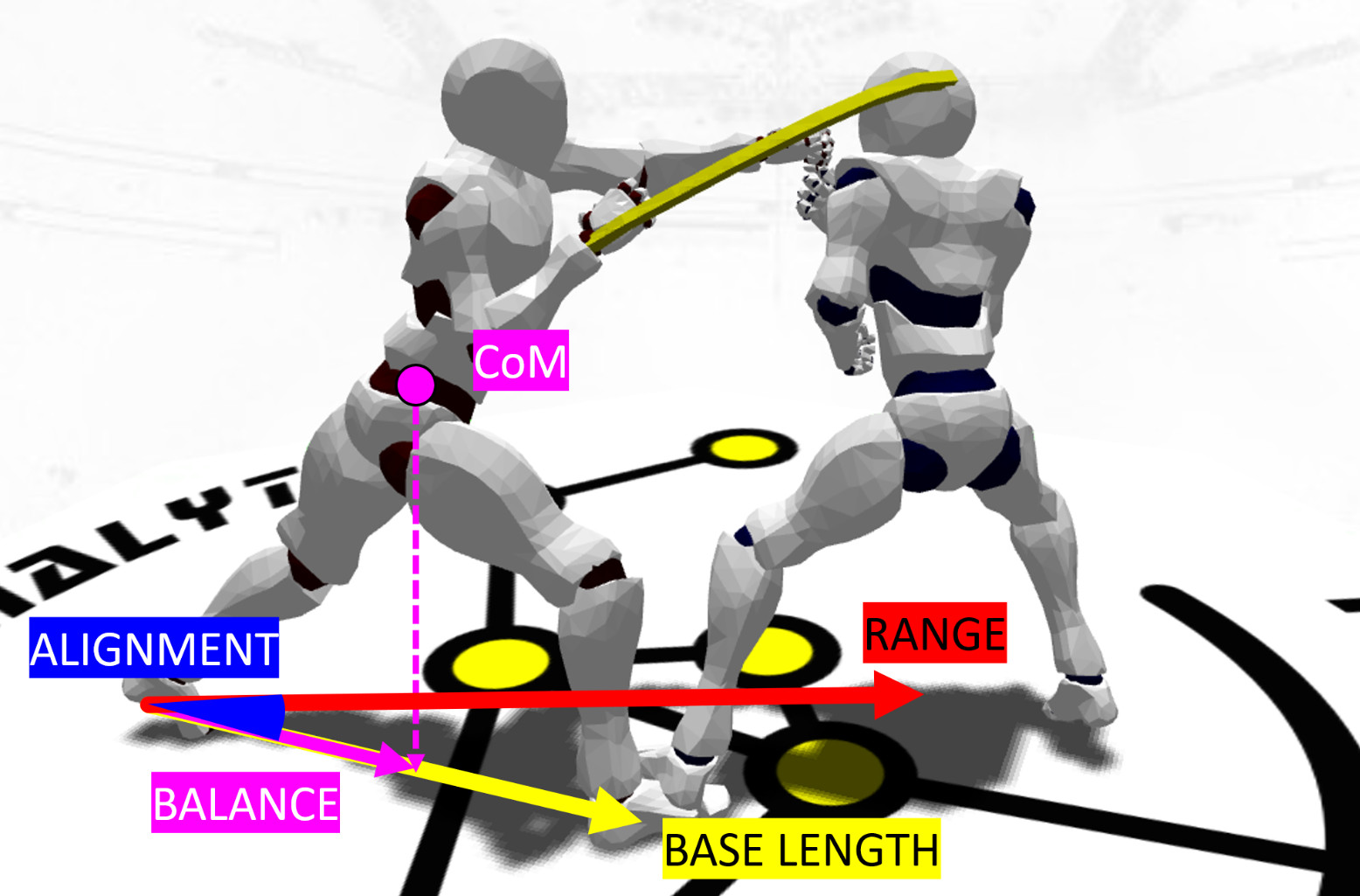

The analysis of tracking data (continuous data) is complex, so we are going back to basics and evaluating the fighter's ability to find the right range and position himself properly before throwing a strike. When he does that, he is able to throw more strikes and hit harder, and thus gains an advantage over his opponent.

Range and positioning (base of support) are translated into a single value, called Footwork. It may be computed discretely, per strike, or continuously, as the average of the last n strikes. The value is compared against models of optimal range and positioning, and tells us how well the fighter is managing his range and stance during the fight.
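The two ways of reading Footwork can be sketched as below. How a single strike is scored is not described here, so the per-strike `score` is treated as an opaque number; the `FootworkTracker` class and its method names are hypothetical.

```python
from collections import deque


class FootworkTracker:
    """Hypothetical helper: tracks Footwork per strike and as a rolling mean."""

    def __init__(self, n):
        # Only the last n per-strike scores are retained.
        self.scores = deque(maxlen=n)

    def add_strike(self, score):
        # Discrete reading: the Footwork value of this strike alone.
        self.scores.append(score)
        return score

    def continuous(self):
        # Continuous reading: mean of the last n strikes seen so far.
        if not self.scores:
            return None
        return sum(self.scores) / len(self.scores)
```

For example, with `n=3` and per-strike scores 1, 2, 3, 4 arriving in that order, `continuous()` averages only the last three values.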

To understand how the fighter is protecting (or shielding) himself, the system follows and accumulates the movements of the fighters' arms and builds a visual "shield" around them. The shield lets us visualize how effective each fighter's guard is at any moment, and even highlights gaps that may be exploited by the opponent.

A monitor of the fighters' movements during the fight, translated into a heatmap and charts that show how the fighters move away from their center of mass.

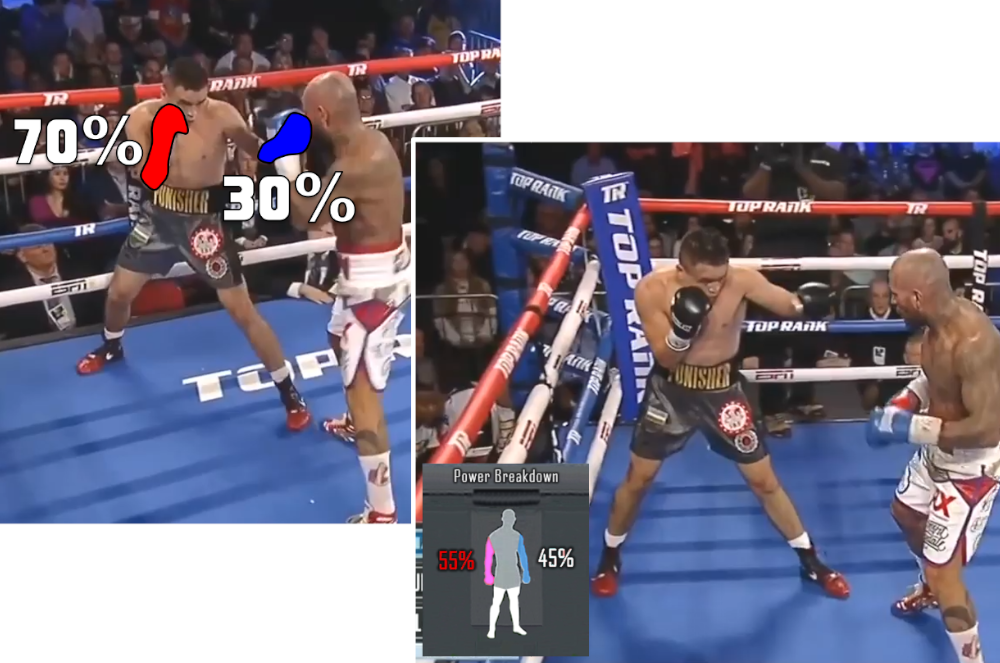

The power generated by each fighter, broken down by limb.

A 2D/3D mapping of the energy inflicted on the opponent's body, recording the frequency, hardest hit, and accumulated damage on each sector of the body. Sectors may be defined at any granularity we want, and so may the mapping: we can choose colors to represent the frequency of the hits and/or the accumulated power by sector, or highlight the fighters' strategies directly over the avatars.
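The three per-sector quantities named above (frequency, hardest hit, accumulated damage) can be kept in a small accumulator. This is a sketch under assumptions: the `DamageMap` and `SectorStats` names, the free-form sector labels, and the unitless `energy` value are illustrative and not taken from the system.

```python
from dataclasses import dataclass


@dataclass
class SectorStats:
    hits: int = 0             # frequency of hits on this sector
    hardest: float = 0.0      # hardest single hit
    accumulated: float = 0.0  # accumulated damage


class DamageMap:
    """Hypothetical accumulator keyed by an arbitrary sector label."""

    def __init__(self):
        self.sectors = {}

    def record_hit(self, sector, energy):
        # Sectors are created on first use, so any granularity works.
        stats = self.sectors.setdefault(sector, SectorStats())
        stats.hits += 1
        stats.hardest = max(stats.hardest, energy)
        stats.accumulated += energy
        return stats
```

Because sectors are plain dictionary keys, the same accumulator serves a coarse split such as "head" and "torso" or a much finer one, and a color mapping can read whichever of the three fields it needs.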

Because we have 3D poses at every moment, we can drive virtual cameras to offer a side view (or "Street Fighter view"), or even watch the fight from the perspective of the fighters and give the viewer a first-person view of the strikes.
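One way to place a side-view camera from the 3D poses is to put it on the perpendicular bisector of the line joining the two fighters. The sketch below assumes a z-up coordinate system, a single reference point per fighter (for instance the mid-hip), and hypothetical `distance` and `height` parameters; none of these details come from the system itself.

```python
import math


def side_view_camera(fighter_a, fighter_b, distance=4.0, height=1.5):
    """Return (position, target) for a camera looking at both fighters side-on.

    fighter_a, fighter_b: (x, y, z) reference points, z pointing up (assumed).
    """
    ax, ay, _ = fighter_a
    bx, by, _ = fighter_b
    mid_x, mid_y = (ax + bx) / 2, (ay + by) / 2

    # Direction from A to B on the ground plane.
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:
        # Fighters coincide on the ground plane: pick an arbitrary side.
        px, py = 0.0, 1.0
    else:
        # Rotate the A-to-B direction by 90 degrees to get the side direction.
        px, py = -dy / length, dx / length

    position = (mid_x + px * distance, mid_y + py * distance, height)
    target = (mid_x, mid_y, height)
    return position, target
```

A first-person view would follow the same idea, with the camera position taken from a fighter's head keypoints and the target from the opponent.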

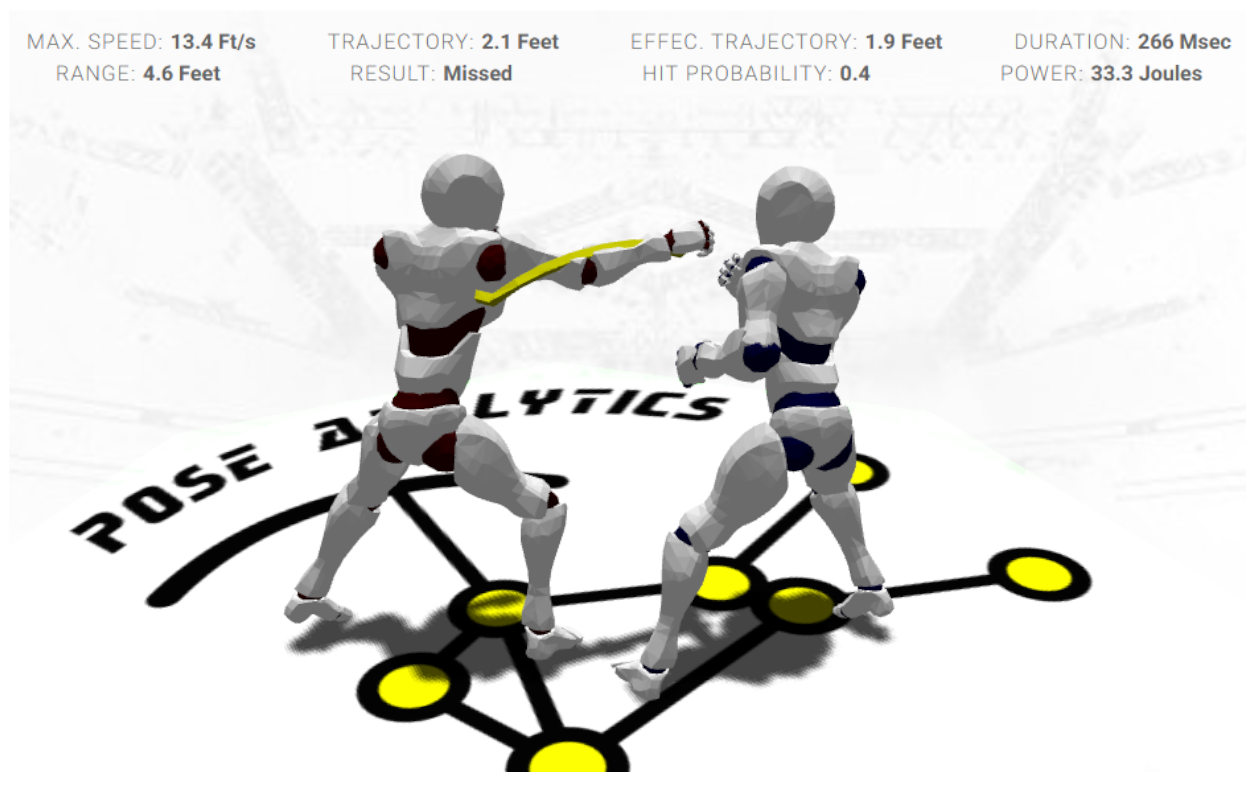

Each detected strike is packed in a web-friendly format (WebGL) so it can be visualized in real time. (The screenshot is interactive: drag and play!)

See it in action: the input video is clipped and calibrated according to each detected strike, and the strike data is burned into the clip automatically. (The screenshot is interactive: click and play!)